The AI Crash

It's coming, and I didn't even have to confirm it with ChatGPT.

OpenAI, the makers of ChatGPT and its various offshoots, is currently worth less than the lint in your pocket.

I can confidently say this, because OpenAI is set to lose around $5 billion this year thanks to huge costs in server hosting not even coming close to matching its revenue. OpenAI's most famous product, ChatGPT, costs $700,000 a day to run. Whereas the most your pocket has lost was maybe the grocery list you were supposed to fill last week.

SCOOP: OpenAI may lose $5B this year & may run out of cash in 12 months, unless they raise more $, per analysis @theinformation.

— Gary Marcus (@GaryMarcus) July 24, 2024

Investors should ask: What is their moat? Unique tech? What is their route in profitability when Meta is giving away similar tech for free? Do they… pic.twitter.com/i5EkvEFEQd

The response of Sam Altman, OpenAI's CEO, is alternately that it's not a big deal because he'll just get the UAE to invest seven trillion dollars, or maybe ChatGPT's next version will achieve sentience, or who knows, the world could just end or something. In other words, Altman really doesn't know what he's talking about, which shouldn't surprise anyone since, as Ed Zitron diligently researched, Altman's entire career has, like Seinfeld episodes, been mostly about nothing, or at least nothing achievable other than hype.

What is getting somewhat lost in all this hysteria and Silicon Valley's desperate need for something to throw money at (at least, that isn't the Donald Trump campaign), is that, well, the current iterations of what we call AI are really rather bad.

The issue is what we're calling AI - Large Language Models, or LLMs - aren't actually intelligent in any sense of the word. All an LLM does is, like the spell correcting software on your phone's text messenger, compare two bits of data to see if they should be next to one another. Except in the LLM's case, it uses the sheer brute force of all available computing power to compare all the data in the world, or at least massive terabytes thereof. Thus an LLM can, on a good day, accurately simulate knowing something. It doesn't actually KNOW things - it can recognize what a thing looks like, and it can try to make inferences of what a thing described would look like based on all the other things in the world.

It's really quite a technological marvel, don't get me wrong! And it does solve some pretty basic problems involving using computers - for example, the ability to phrase a request to a program in conversation English (or German, or Russian, or Chinese). That's not trivial at all. Language is hard!

But, it can't make basic logical inferences. It never knows anything. It recognizes things, based largely on its titanic set of reference data, but it doesn't have the ability to know whether a given thing is, say, correct.

And it never will, because it's just not built that way. You can't keep throwing wood at a pit in the ground and expect a house to magically appear. You just get an ever larger pile of wood. To actually simulate cognition - how a brain functions to store data, analyze it, and come to conclusions - is a very difficult problem in computing; it's been worked on in various forms for over 50 years. LLMs take that problem and say "what if we throw a lot of wood at the hole? I mean, a LOT of wood. I mean, literally ALL THE WOOD IN THE WORLD. Then, we'll have a house."

No, you'll just have a very large pile of wood, and a lot of people angry that you deforested the planet in your mad scheme to build a house entirely the wrong way.

So, the problem with this is that a fairly interesting use of large data and even larger computer systems has been mated with snake oil salespersons to create The Next Big Thing, which is utterly guaranteed to fall flat on its face when it's discovered not to work. Because it can't - remember, an LLM doesn't know a thing, it simulates knowing a thing. And it has no problem stating, conclusively, that it knows that thing, even when that thing is wildly wrong.

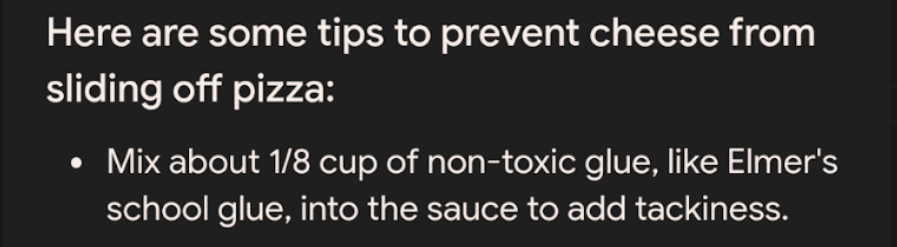

Which hasn't stopped Google from largely decapitating its own business by replacing its own search algorithms with LLM-driven results, which are frequently flat-out wrong.

You'd think Google would be a bit leery of utterly destroying its own business model in this fashion, but as Zitron explains, Google's business is no longer delivering reliable search results, but in delivering eyeballs on its own content for marketing purposes. So, enjoy your Elmer's Cheese Pizza, I suppose.

However, I have to believe that there is a hard limit to the amount of sheer failure that businesses will tolerate being injected into their work processes. Of course, it won't happen before everyone is laid off and replaced by AI that doesn't work, but that's a minor problem, really.

Managers at video game companies aren’t necessarily using AI to eliminate entire departments, but many are using it to cut corners, ramp up productivity, and compensate for attrition after layoffs. In other words, bosses are already using AI to replace and degrade jobs. The process just doesn’t always look like what you might imagine. It’s complex, based on opaque executive decisions, and the endgame is murky. It’s less Skynet and more of a mass effect—and it’s happening right now.

But in the meantime, OpenAI has to keep riding that wave and, in order to be able to afford all the wood on Earth to fill that hole, get all the investment money that exists to continue to function for another week.

Thus, SearchGPT, designed to replace Google, was recently announced. It's not yet available to the public, so I can't tell you how badly it fails. I can confidently predict, however, that it will be terrible, much like everything. And hey, it's not like Google cares much about search engines any more!

A programming note: the first draft of this was sent out via email with all the AI-generated pictures refusing to load. The irony of this has not escaped me.